In a world where genuine companionship feels increasingly rare, Roy & Rachel explored whether technology can provide real emotional connection. I created an emotive animatronic face designed to listen, process, and then respond to emotions through facial expressions. This project aimed to deepen the relationship between human and machine, making interactions with technology feel more natural and intimate.

ROY, A TOY

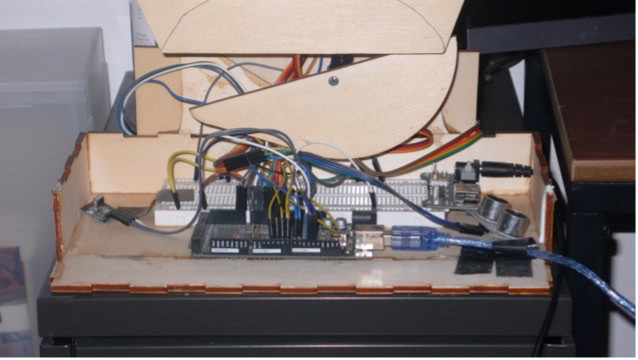

The face began as a mechatronic plywood model I designed and built. Initially named Roy, it used servo motors to animate key facial features and convey a wide range of expressions. The servos were driven by C++ code running on an Arduino. Features could be moved smoothly with joysticks, or set to one of several pre-programmed expressions with an infrared remote, a playful way to explore Roy as a toy.
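Roy's actual firmware is C++ on the Arduino and is not reproduced here. As a rough illustration of the joystick logic only, the Python sketch below maps a raw joystick reading to a servo angle and limits how far the servo moves per update so the motion stays smooth. The function names, the 0 to 1023 input range (typical of a 10-bit analog input), the 0 to 180 degree servo range, and the step limit are all assumptions, not values taken from the project.

```python
def joystick_to_angle(reading: int, lo: int = 0, hi: int = 180) -> int:
    """Map a raw joystick reading (assumed 0..1023) to a servo angle in degrees."""
    reading = max(0, min(1023, reading))  # clamp out-of-range readings
    return lo + (hi - lo) * reading // 1023


def step_toward(current: int, target: int, max_step: int = 3) -> int:
    """Move at most max_step degrees toward target, so the servo does not jump."""
    delta = max(-max_step, min(max_step, target - current))
    return current + delta
```

Calling `step_toward` once per control loop iteration, with `target` coming from `joystick_to_angle`, would ease each feature toward the joystick position rather than snapping to it.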

Roy demonstration - Joystick interaction

RACHEL, A FRIEND

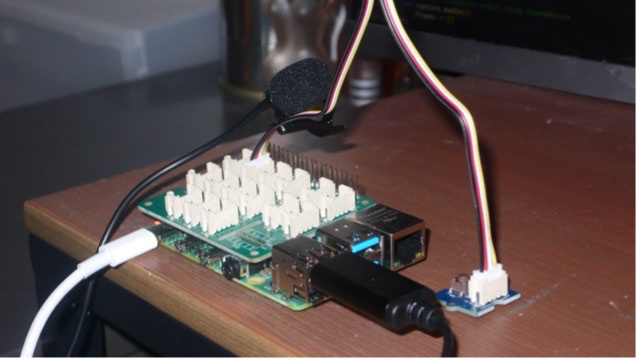

Later, I transformed Roy into Rachel, an Internet of Things device. To make the interaction more natural, users speak to Rachel, who listens and responds with corresponding emotions.

Rachel demonstration - Speech interaction

RACHEL'S DEVELOPMENT

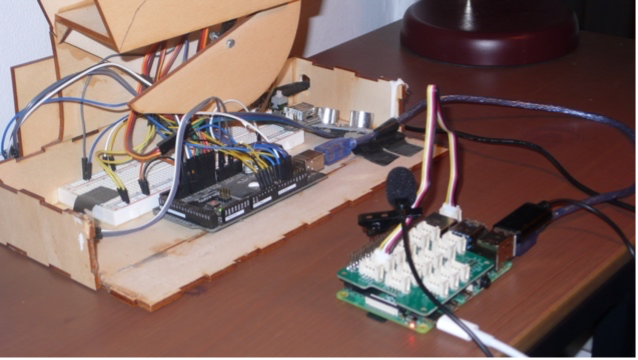

ARCHITECTURE

A microphone records the speech, a REST API converts it to text, and a second API, backed by a custom machine learning model I built, detects the emotion and translates it into commands that Rachel relays as expressions. This architecture was built with Python on a Raspberry Pi.
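The flow described above can be sketched in a few lines of Python. This is a minimal outline under stated assumptions, not Rachel's actual code: the speech-to-text step is passed in as a function so no particular REST service is implied, `detect_emotion` is a keyword lookup standing in for the custom machine learning model, and the expression names and servo angles in `EXPRESSIONS` are hypothetical.

```python
from typing import Callable, Dict

# Hypothetical servo targets (degrees) for each expression; real values are not given.
EXPRESSIONS: Dict[str, Dict[str, int]] = {
    "happy":   {"brow": 110, "eyelid": 90, "mouth": 140},
    "sad":     {"brow": 60,  "eyelid": 50, "mouth": 40},
    "neutral": {"brow": 90,  "eyelid": 90, "mouth": 90},
}


def detect_emotion(text: str) -> str:
    """Stand-in for the emotion API: a keyword lookup, not the custom ML model."""
    words = set(text.lower().split())
    if words & {"great", "happy", "love"}:
        return "happy"
    if words & {"sad", "lonely", "tired"}:
        return "sad"
    return "neutral"


def respond(audio: bytes, speech_to_text: Callable[[bytes], str]) -> Dict[str, int]:
    """Run one interaction: audio -> text -> emotion -> servo command."""
    text = speech_to_text(audio)      # in the real device, a REST call
    emotion = detect_emotion(text)    # in the real device, a second API
    return EXPRESSIONS.get(emotion, EXPRESSIONS["neutral"])
```

Passing the transcription step in as a callable keeps the sketch runnable offline; on the device it would wrap the request to the speech-to-text service.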